Today marks the first day of the Report Stage of the Online Safety Bill. As this Bill progresses through the Houses of Parliament, we hope to (once again) raise the alarm about the risks it poses to encryption.

As ever, our concern is around chapter 5 of the Bill, particularly clause 111 (formerly clause 110, before changes in the House of Lords). This is a key section as it defines the approach to notices to deal with terrorism and CSEA content. As it stands, clause 111 leaves the door open for providers to be forced to embed some form of third-party scanning software to identify potentially illegal content in private communications.

Ideally, this section would guarantee that this type of notice cannot be imposed on providers of end-to-end encrypted communications. Instead, it relies on questionable concepts such as “accredited technology” to wave vaguely at some form of assurance that ‘this sort of thing’ is technically possible and that it’ll all be fine, secure and appropriate.

Be under no illusion; “accredited technology” is no more reassuring than a lock which can be opened by a skeleton key.

It’s this aspect of the Online Safety Bill - its potential to undermine end-to-end encryption - that we reject as completely flawed. It is not possible to have both secure communications and blanket surveillance, in the same way you cannot “have your cake and eat it.”

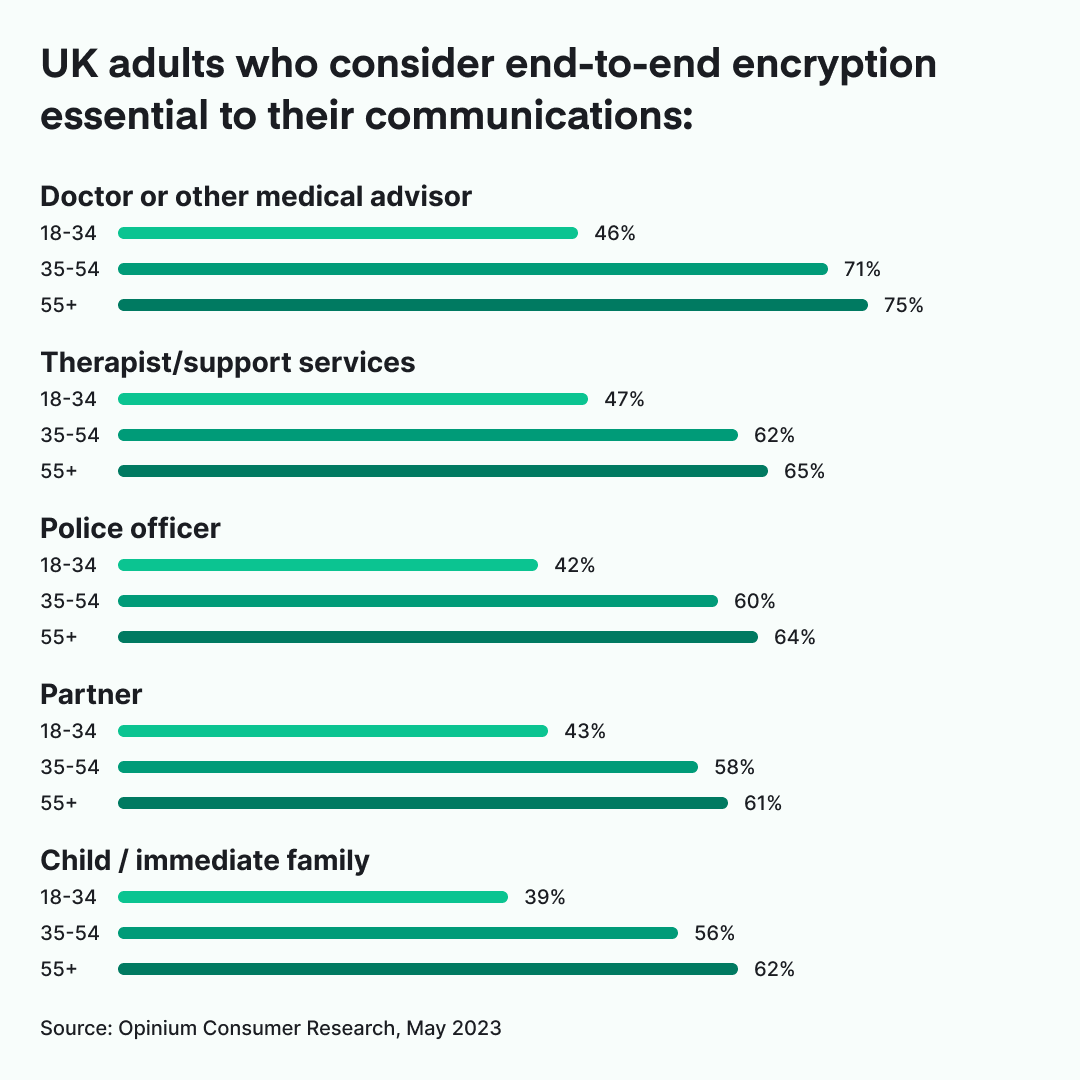

It’s also out of touch with what people want. Research by Opinium, based on a survey of 2,000 politically and nationally representative UK citizens, shows widespread consumer support for retaining the privacy of our online conversations with end-to-end encryption technology.

83% of UK citizens believe personal conversations on messaging apps such as Element, WhatsApp or Signal should have the highest level of security and privacy possible.

It’s also notable that private conversations are more important to women than men, and that the appetite for privacy increases with age. For a piece of legislation that’s been so aggressively positioned to appeal to certain types of voters, it’s perhaps an eye-opening reminder to the government that people take their privacy seriously. It turns out that end-to-end encryption is the ‘will of the British people.’

That the Online Safety Bill fails to deliver should not be much of a surprise.

As succinctly summarised by The Verge, the original Online Harms white paper “was introduced four prime ministers and five digital ministers ago”, and in that time it has “ballooned in size — when the first draft of the Bill was presented to Parliament, it was “just” 145 pages long, but by this year, it had almost doubled to 262 pages.” In this session of the House of Lords alone, there are more than 300 amendments being discussed - we dread to think what the Bill will look like once we reach the end of this stage.

The legislation is, quite simply, a confused, overreaching hotchpotch of wishful thinking.

With regard to end-to-end encryption, the principal idea is that the government expects tech companies to create their own tools for scanning and reporting abusive content - or to trust a future (unspecified) magical third-party solution based on client-side scanning. Countless experts have pointed out that this is impossible.

Our key concerns are:

- The requirements for this type of technology are impossible to achieve without fatally undermining end-to-end encryption. Plain and simple.

- Ofcom’s codes of practice on how these notices will be used will only be defined in detail after the Bill has passed (albeit within the framework defined by clause 112).

- As it stands, any form of scanning introduces a weakness in end-to-end encrypted products, painting a massive target on either the companies deploying their own tooling or the “accredited” third parties. This looks set to be a jackpot for those seeking to access and abuse communications.

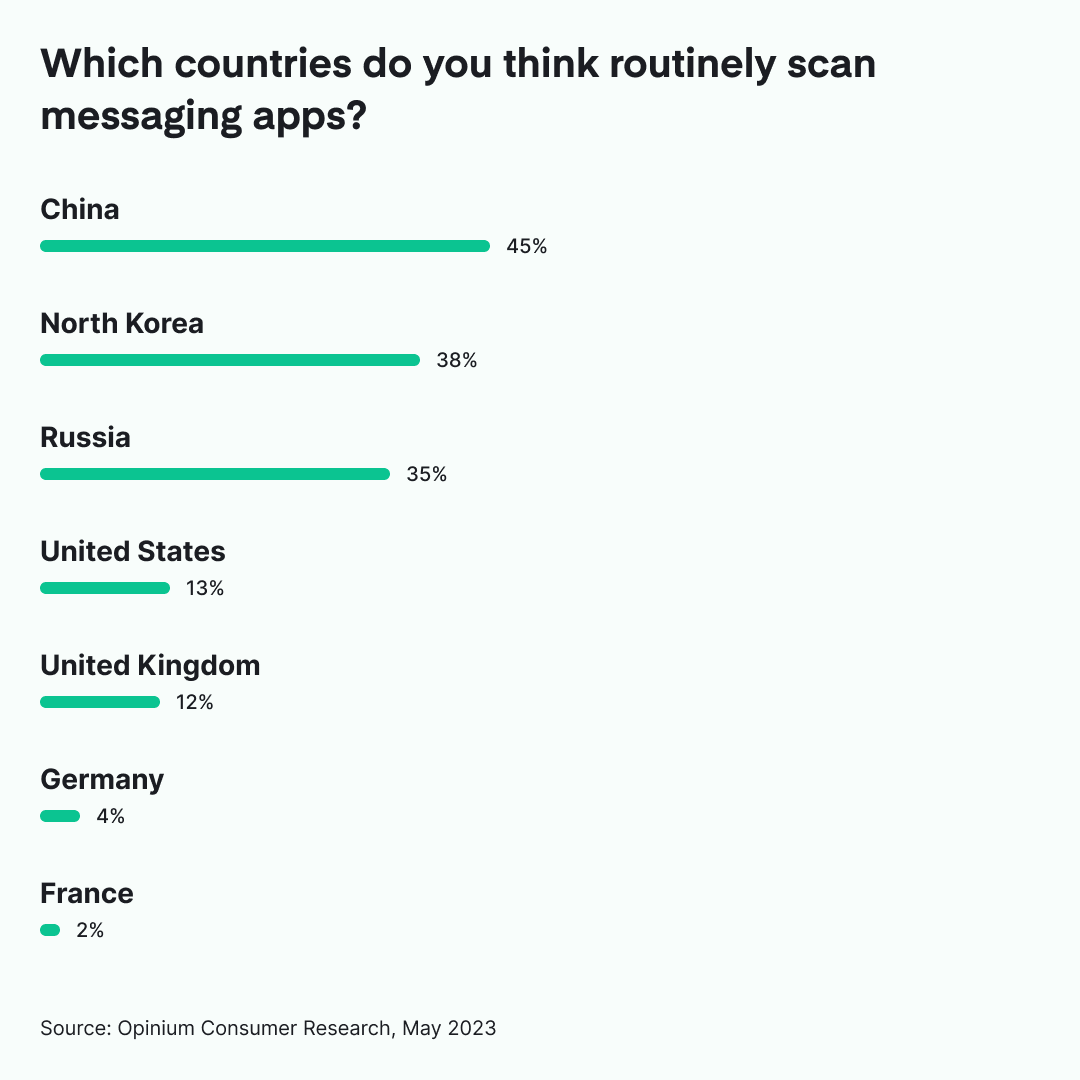

- There is an expectation that global citizens using products that might also be used in the UK will be amenable to all of their conversations being scanned.

- All current “artificially intelligent” scanning solutions are limited, intrinsically unreliable and prone to hallucinations. Yet we are asked to simply trust that AI technology a) will quickly mature and b) will be developed responsibly, without any form of bias or false positives.

- The potential consequences of hooking up an artificial intelligence tool to rampage through the nation’s private conversations are simply chilling.

To recap, a major legislative change involving a wonderful but unspecified solution. You could be forgiven for being a little sceptical about these Sunlit Uplands.

At times tech firms defending end-to-end encryption are accused of being obstinate. But a sensible debate is impossible when there’s absolutely no detail of a proposed technical solution. Instead, we keep hearing again and again that a solution can be invented, as if it’s just a matter of clicking our fingers and being done with it.

Fact is, it’s not possible. Especially when the solution would be a contradiction in terms - you cannot scan encrypted content for abuse without fundamentally undermining the privacy of encryption for everybody. We’re literally expected to build the tools that will undermine the security of our platform.
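To make that contradiction concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not a description of any real or “accredited” scanning product: it assumes scanning works by comparing fingerprints of content against a list of known material, the XOR cipher is a toy stand-in for real end-to-end encryption, and `toy_encrypt`, `fingerprint` and `blocklist` are hypothetical names invented for this example.

```python
import hashlib
import secrets


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Toy XOR cipher standing in for real encryption (illustration only)."""
    return bytes(p ^ k for p, k in zip(plaintext, key))


def fingerprint(data: bytes) -> str:
    """Hypothetical content fingerprint: a plain SHA-256 digest."""
    return hashlib.sha256(data).hexdigest()


# A hypothetical list of fingerprints of known flagged content.
flagged = b"example flagged content"
blocklist = {fingerprint(flagged)}

# The sender encrypts with a key that only the two endpoints hold.
key = secrets.token_bytes(len(flagged))
ciphertext = toy_encrypt(key, flagged)

# Anyone in the middle sees only ciphertext, which does not match the list.
server_side_match = fingerprint(ciphertext) in blocklist

# A match is only possible where the plaintext exists: on the user's own
# device, before encryption. That is what client-side scanning means.
client_side_match = fingerprint(flagged) in blocklist

print("match on ciphertext:", server_side_match)
print("match on plaintext: ", client_side_match)
```

In this sketch the scanner only works once it sits alongside the plaintext on the device, which is exactly the access that end-to-end encryption is meant to deny to everyone but the people in the conversation.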

Turning the ‘10’ on the door of 10 Downing Street into a string of binary doesn’t make the government a tech expert. Indeed, it smacks of the polar opposite.

Design for future threats

The best secure, privacy-protecting technology is designed to be future-proof in the face of darker times, not something to be undermined by a regulator that’s run out of ideas for enforcement. History shows that designing purely for the present is negligent in the extreme. Eras pass, situations change and threats evolve. Russia’s invasion of Ukraine is perhaps the most recent reminder of how quickly life can change.

Privacy is a human right in benign times, and crucial when facing oppression. A tech product that provides security and protects privacy needs to stand the test of time. The best privacy-respecting products ensure that even the provider itself cannot betray trust. To enable blanket third-party access is a dereliction of duty, in both the immediate and the long term.

Time and again, society sees existing systems extended and abused for purposes beyond those for which they were created.

Mass surveillance won’t address child abuse

It goes without saying that child abuse is abhorrent. It’s a horrific crime and every effort should be made to stop it.

Undermining end-to-end encryption, however, will not have any impact on addressing child abuse - and here’s why:

- Offenders and those who want to cause harm will just use unregulated apps, a behaviour already documented in past law enforcement investigations.

- More investment is required in specialised and tech-savvy law enforcement agents, trained to identify, investigate and infiltrate CSAM distribution rings and bring actual perpetrators to account. Child abusers, terrorists and other bad actors have to operate in the open in order to recruit. This is where law enforcement should focus.

- The most effective way to address such abuse is by empowering and informing young people, parents and educators, be it in schools or public information campaigns. The New Zealand government’s Keep it Real campaign is a shining example of a mature approach to a real human problem, providing actual solutions instead of delegating responsibility to tech companies.

- Beyond the grooming of children to send illegal photos, child abuse is a human crime that is perpetrated in the physical world. It is certainly not a problem exclusive to the digital world, nor to encrypted spaces.

- Of course, reports alone do not fix this issue, so we need to see law enforcement actually enforcing the existing laws on child abuse in both the physical and online worlds.

- Children themselves need to be heard and believed. A report by the Independent Inquiry into Child Sexual Abuse found many instances of failures to investigate allegations of abuse “due to assumptions about the credibility of the child.” This left already vulnerable children, particularly those in care, at even greater risk, as they were often considered to be “less worthy of belief.” Additionally, only an estimated 7% of victims and survivors contacted the police at the time of the offence. Not only that, we’re seeing a decline in convictions of around 25%. Perhaps it is time to address these real-life issues before undermining the privacy and security of an entire nation’s communications?

Focus on what actually matters

One of the most sensible voices in this debate is Ciaran Martin, former head of the UK’s National Cyber Security Centre (NCSC). As he highlights in Prospect, the Online Safety Bill is a “cakeist” policy in which the government is “searching for the digital equivalent of alchemy.”

His prediction is that the Online Safety Bill, complete with its undermining of end-to-end encryption, will be forced through but never - in practice - implemented; not least because it will trigger the likes of Element, WhatsApp and Signal to pull their products from the UK.

So if the government is serious about addressing child abuse, it would surely do better to focus on the real world and invest in education, the criminal justice system and social care.

But perhaps therein lies the real truth. Maybe child abuse is not a fig leaf to introduce mass surveillance, as many speculate. Perhaps, in actual fact, posturing on end-to-end encryption is the fig leaf for a lack of investment in education, policing and social care. After all, talk is cheap.